🚨 Stargate: $100 Billion Bet on AI

Plus, OpenAI's NEW Voice Engine

Welcome, AI RESEARCHERS.

Microsoft and OpenAI embark on a $100 BILLION mission

A new supercomputer, codenamed "Stargate", could reshape the future of AI.

This massive undertaking aims to push the boundaries of artificial intelligence capabilities like never before.

In today’s AI Researches:

The AI Power-Up: Grok-1.5

Microsoft, OpenAI Eye $100B "Stargate" AI Supercomputer

Realistic Voice Synthesis Now Easier with OpenAI

For Data-Guzzling AI Companies, the Internet Is Too Small

How to Use NotebookLM for Content Creation

5 top AI tools for designers

More AI hits & news

LLMs Hit a New Learning Plateau

Read time: 5 minutes

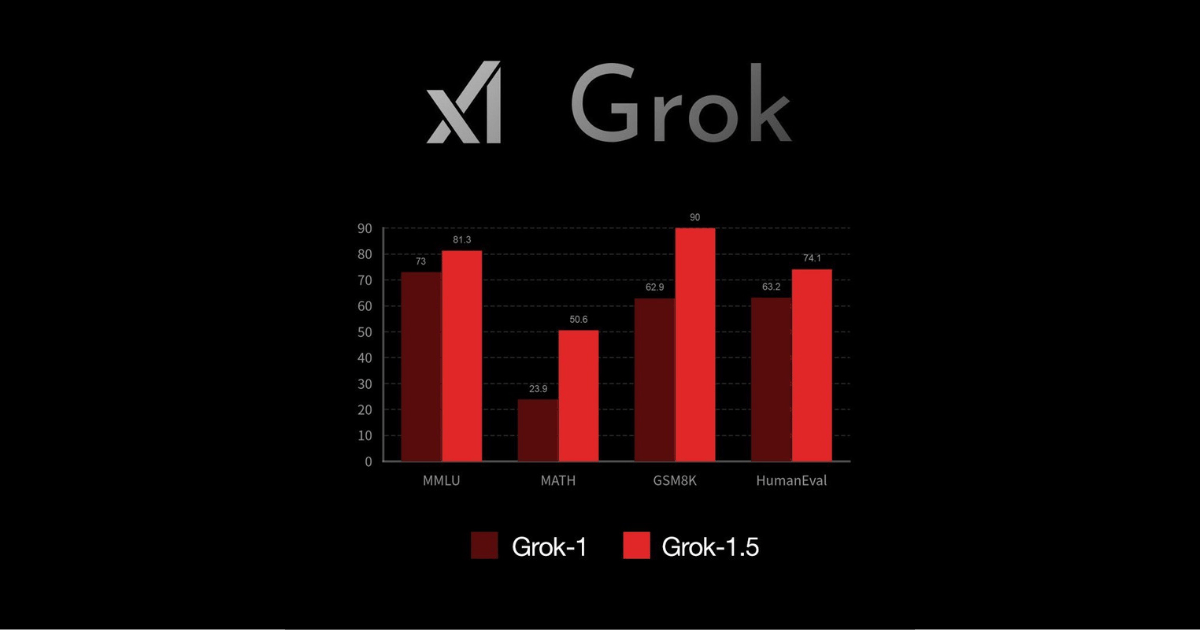

GROK 1.5

AI Researches: xAI's updated language model, Grok-1.5, shows significant advances in understanding longer text, following complex instructions, and solving math and coding problems.

For more details:

Enhanced Reasoning: Grok-1.5 outperforms its predecessor in math and coding tasks, evident in its scores on recognized benchmarks (MATH, GSM8K, HumanEval).

Long Context Understanding: The model can process significantly longer text (up to 128K tokens), making it better suited for intricate prompts and utilizing information from larger documents.

Robust Infrastructure: Grok-1.5 relies on a custom training system (JAX, Rust, Kubernetes) for reliability and flexibility in development and training.

Availability: Grok-1.5 should be available on 𝕏 next week. According to Elon Musk, Grok 2 is now in training and should exceed current AI on all metrics.

Why it matters: Grok-1.5's improved reasoning and ability to handle long contexts expand the model's potential applications. Both are essential for tasks that require complex logic and drawing insights from large amounts of information.

THE SKYNET

Image source: Getty Images

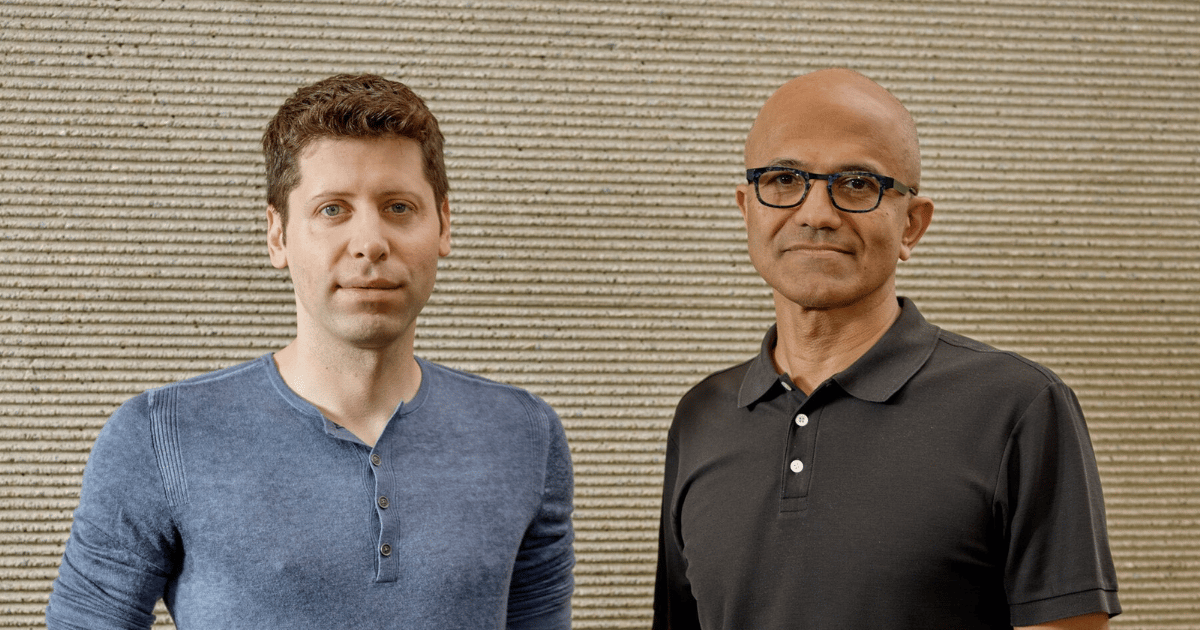

AI Researches: Microsoft and OpenAI are planning a multi-phase, $100 billion project to build a series of AI supercomputers, with the proposed "Stargate" as the pinnacle. The initiative aims to significantly advance large-scale AI capabilities.

The important details:

Project Goals: Create a series of increasingly powerful AI supercomputers, culminating in "Stargate".

Collaboration: Microsoft to provide financing, with OpenAI contributing technological expertise.

Timeline: The project is planned in five phases, with smaller supercomputers leading up to the Stargate launch, potentially by 2028.

Cost: Anticipated to exceed $100 billion, driven by infrastructure and next-generation AI chip procurement.

Focus: Push the boundaries of current AI capabilities and potentially transform various industries.

Why this matters: This supercomputer could dramatically speed up the development of large AI models. This has the potential to unlock breakthroughs in fields like healthcare, scientific research, and personalized experiences. Careful attention to the ethical use of this technology will be essential.

OPENAI VOICE ENGINE

AI Researches: OpenAI's new Voice Engine technology can generate a realistic synthetic replica of a voice from just a 15-second audio sample. Access is currently limited to support responsible development.

The important details:

Voice Engine's capabilities: Can mimic a person's voice and translate speech into other languages upon command.

Responsible development: OpenAI is prioritizing safeguards due to the potential for misuse.

Partner use cases: Education technology, personalized instruction, healthcare communication, and accessibility tools.

Audio Analysis: OpenAI provided audio samples demonstrating the model's accuracy.

Government action: The US government is taking steps to prevent malicious use, such as the FCC ban on AI-voice robocalls.

Why it matters: This technology has the potential to be both beneficial and harmful. OpenAI and its partners are working carefully to ensure ethical development and use, as deepfakes and impersonation risks could pose challenges for authentication and trust.

AI NON-QUALITY FUTURE

Image source: Screenshot via WSJ

AI Researches: AI development faces a potential roadblock: the internet may not have enough high-quality data to sustain the training of increasingly powerful AI models.

For more details:

The AI Data Diet: Large language models (LLMs), the technology behind tools like ChatGPT, learn from massive amounts of text and code. The more data they consume, the better their ability to generate human-like responses.

Approaching the Limit: Experts predict the demand for high-quality text data may exceed online supply within the next few years. Without more data, progress in the AI field could stall.

It's Not Just Quantity: Finding clean, well-organized data is crucial. Much of the internet's content is fragmented or unusable for AI training.

Emerging Strategies:

Companies like OpenAI are exploring using transcriptions of YouTube videos.

AI-generated (synthetic) data is a potential solution, but training on it carries the risk of model malfunctions.

Tech companies aim to refine data selection techniques for more efficient model training.

Why it matters: A lack of data would limit the abilities of future AI applications and could slow advancements that benefit various industries and aspects of life.

AI AT WORK

Using NotebookLM for Content Creation

What it Does:

Organizes your thoughts: Lets you upload your notes (Google Docs, PDFs, text) and ask questions to find insights within your research.

Generates ideas: Suggests new angles and connections, and helps you brainstorm based on the content you've provided.

Creates outlines: Uses your notes and questions to help structure your final content, such as a script or blog post.

How to use it:

Upload your research: Add sources relevant to your topic.

Ask questions: Type what you want to know about your notes or ask for specific insights.

Pin important answers: Save useful answers to build your content outline.

Generate your outline: Use NotebookLM to suggest an outline for your final piece.

✅ 5 must-have AI tools for designers in 2024:

💻 Reom: AI-powered website builder that helps you create sitemaps and generates full-fledged wireframes.

⚙️ Slater: AI code generator that writes custom code to help you add complex functionality to your Webflow projects.

🎨 Midjourney: AI tool that creates stunning custom images to elevate your website designs.

🖼️ ChatGPT (Pro version): AI assistant that generates images based on your descriptions and lets you provide feedback to refine the results.

🔍 Magnific: AI image upscaler that significantly improves the resolution and detail of AI-generated images.

Meta's AI-powered features are coming to Ray-Ban smart glasses next month, initially rolling out to US users on an early access waitlist.

Amazon intends to invest nearly $150 billion over the next 15 years in data centers to manage the anticipated surge in demand for AI applications.

OpenAI introduces a powerful image editor within its DALL·E platform. Users can now add, remove, or change elements within existing AI-generated images using natural language prompts.

The National WWII Museum's new "Voices of the Front" exhibit uses AI to preserve the memories of World War II veterans, allowing visitors to ask questions and receive answers for a unique conversational experience.

Japan and the US announce a framework for joint research and development in AI, partnering with tech leaders like Nvidia, Arm, and Amazon, signaling a deeper technological alliance.

OpenAI establishes a new presence in Asia with the opening of its Tokyo office as part of the company's broader international growth strategy.

Cognition Labs, the AI startup behind Devin that was established in November, is seeking a $2 billion valuation despite warnings of a potential AI bubble.

Google.org launches a $20M accelerator program to support nonprofits leveraging generative AI, aiming to streamline social impact initiatives through AI-powered tools.

LLMs BREAKTHROUGH

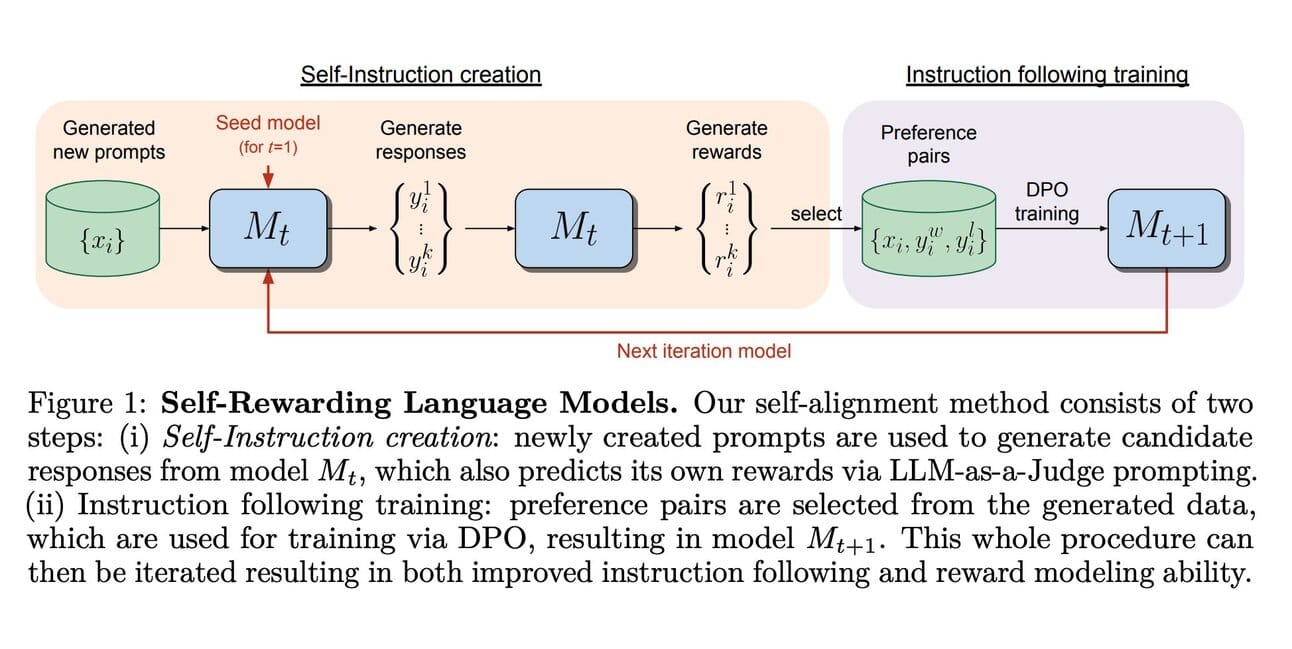

AI Researches: Research suggests large language models (LLMs) may have a ceiling on how much they can improve by themselves. Studies indicate that improvement slows after just a few rounds of self-training.

For more details:

Iterative Self-Improvement: LLMs can learn by proposing prompts, crafting responses, and then self-evaluating their output.

The Saturation Point: Despite initial progress, most models reach a performance plateau after approximately three rounds of self-training.

Critic vs. Actor: It's theorized that the model's ability to evaluate (the "critic") lags behind its ability to generate (the "actor"), limiting further improvement.

External Signals Needed: Researchers suggest that external inputs like theorem verification or specific test suites might be needed for ongoing, general-purpose improvement.
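To make the critic-versus-actor idea concrete, here is a toy simulation in Python. It is only a sketch under loud assumptions, not the researchers' method: "skill" is a single made-up number, the critic is modeled as unable to tell apart any responses better than its own `critic_ceiling`, and every name and parameter below (`critic_score`, `self_training_round`, `run_self_training`, the noise levels) is hypothetical.

```python
import random


def critic_score(quality, critic_ceiling, rng, noise=0.05):
    """Toy critic: it cannot see quality differences above its own ceiling."""
    return min(quality, critic_ceiling) + rng.gauss(0.0, noise)


def self_training_round(actor_skill, critic_ceiling, rng,
                        n_prompts=200, n_samples=4):
    """One round of self-training in this toy model.

    For each prompt the actor samples several responses, the critic picks
    its favorite, and the actor's new skill is the average true quality
    of the responses the critic kept.
    """
    kept = []
    for _ in range(n_prompts):
        # True quality of each sampled response, scattered around actor skill.
        candidates = [actor_skill + rng.gauss(0.0, 0.1) for _ in range(n_samples)]
        # The critic only sees its own (capped, noisy) score for each response.
        scored = [(critic_score(q, critic_ceiling, rng), q) for q in candidates]
        kept.append(max(scored)[1])
    return sum(kept) / len(kept)


def run_self_training(actor_skill=0.3, critic_ceiling=0.5, rounds=8, seed=0):
    """Return the actor's skill before training and after each round."""
    rng = random.Random(seed)
    history = [actor_skill]
    for _ in range(rounds):
        history.append(self_training_round(history[-1], critic_ceiling, rng))
    return history


if __name__ == "__main__":
    history = run_self_training()
    for i in range(1, len(history)):
        gain = history[i] - history[i - 1]
        print(f"round {i}: skill={history[i]:.3f} gain={gain:+.3f}")
```

In this toy setup the early rounds show clear gains, and the gains shrink once the actor's skill passes what the critic can judge. Raising `critic_ceiling` stands in for adding an external signal such as a test suite.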

Why it matters: This research highlights a potential bottleneck in the quest for ever-improving AI with general-purpose capabilities. Understanding this plateau can inform future research on how to break through the limitation, a key step towards even more powerful language models.

A quick thank you for reading!

We appreciate you taking the time to read our newsletter! Your feedback is important to us. Please feel free to reply directly with your thoughts and suggestions.
